Table 2 shows the differences between the moderated human marks and the auto marks for the test set. Most of the time, the human markers marked to the nearest whole number (as did the automatic marker). The mean difference for the 70 diagrams was 0.34 with a standard deviation of 0.468. A good approach to comparing two markers is to use inter-rater reliability and, in particular, Gwet’s AC1 statistic (Gwet, 2001). We also present Fleiss's generalised kappa measure (Fleiss, 1971) to enable easier comparison with other marking approaches. We consider Gwet's measure to be superior, as it more accurately accounts for chance agreement between markers (see Gwet (2001) for details). Critical values for these measures are, for two raters, around 0.15 for both AC1 and kappa: agreement measures above these values allow us to reject, with over 99% confidence, the null hypothesis that the marks are being allocated randomly.
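To make the two agreement measures concrete, the following is a minimal sketch of how AC1 and Fleiss's generalised kappa can be computed for the two-rater case, using the standard textbook definitions. It is not the code used in this study: the function name `agreement_stats`, its arguments and the toy marks in the usage note are assumptions made for illustration only.

```python
from collections import Counter
import math


def agreement_stats(marks_a, marks_b, categories):
    """Return (AC1, Fleiss kappa) for two raters marking the same items.

    `categories` lists every mark value on the scale, including values
    that neither rater happened to use. All names here are illustrative.
    """
    if len(marks_a) != len(marks_b) or not marks_a:
        raise ValueError("both raters must mark the same non-empty item set")
    q = len(categories)
    if q < 2:
        raise ValueError("at least two mark categories are required")

    n = len(marks_a)
    # Observed agreement: proportion of items given identical marks.
    p_a = sum(a == b for a, b in zip(marks_a, marks_b)) / n

    # pi_k: share of all 2n ratings that fell into category k.
    counts = Counter(marks_a) + Counter(marks_b)
    pi = [counts[k] / (2 * n) for k in categories]

    # Chance agreement under Gwet's AC1 and under Fleiss's kappa.
    pe_ac1 = sum(p * (1 - p) for p in pi) / (q - 1)
    pe_kappa = sum(p * p for p in pi)

    ac1 = (p_a - pe_ac1) / (1 - pe_ac1)
    # Kappa is undefined when every rating falls into a single category.
    kappa = (p_a - pe_kappa) / (1 - pe_kappa) if pe_kappa < 1 else math.nan
    return ac1, kappa
```

For example, with the invented marks `human = [1, 1, 2, 2]` and `auto = [1, 1, 2, 1]` on a two-point scale, `agreement_stats(human, auto, [1, 2])` gives an AC1 of 9/17 and a kappa of 7/15. The two measures differ only in how they estimate chance agreement.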

If a repaired diagram is re-checked, errors may still be detected, but these are the irreparable complex errors which the user should attempt to fix prior to submitting the diagram to the automatic marker. Of course, the user may not be able to fix the errors and can submit the syntactically incorrect diagram to the automatic marker, which has to operate in the face of such errors.

We mark diagrams by comparing a student’s attempt with a gold standard, a specimen solution (there could be more than one acceptable solution). The comparison produces a set of similarity measures for certain diagram features known as minimal meaningful units (MMUs) (Smith et al., 2004), to which the mark scheme used by the human markers is applied. We then compare the results of the automatic marker to the moderated human marks. The similarity measures are modified by certain weights and thresholds which have to be determined for the data set under test. The weights represent the significance, for marking purposes, of diagram features in a particular domain and are set by the user. Thresholds are determined by experiment and represent limiting values: for example, two objects will be considered the same if the measure of their similarity is above a certain threshold. Following the approach adopted for ERDs (Thomas et al., 2007c), we determine suitable values for the thresholds using a training set of diagrams, in this case the first 30 diagrams in our corpus. The trained marker is then applied to the remaining diagrams (the test set consisting of 70 diagrams) and the results are reported for this test set.
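The role of weights and thresholds can be illustrated with a small sketch. This is an assumed, simplified form (a weighted average of per-feature similarities followed by a threshold test), not the marker's actual similarity function. The feature names, weight values and threshold below are hypothetical.

```python
def weighted_similarity(feature_sims, weights):
    """Combine per-feature similarities (each in [0, 1]) into one score.

    `weights` maps a feature name to its user-set significance; a feature
    missing from `feature_sims` contributes a similarity of 0.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    score = sum(w * feature_sims.get(name, 0.0) for name, w in weights.items())
    return score / total


def is_same_object(feature_sims, weights, threshold):
    """Treat two objects as the same if their similarity exceeds the threshold."""
    return weighted_similarity(feature_sims, weights) > threshold


# Hypothetical values: the object name counts twice as much as its type.
weights = {"name": 2.0, "type": 1.0}
sims = {"name": 0.9, "type": 0.5}
print(weighted_similarity(sims, weights))
print(is_same_object(sims, weights, threshold=0.7))
```

In this sketch the weights would be chosen by the user for the domain, while the threshold would be tuned on the training diagrams and then held fixed when the marker is run on the test set.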

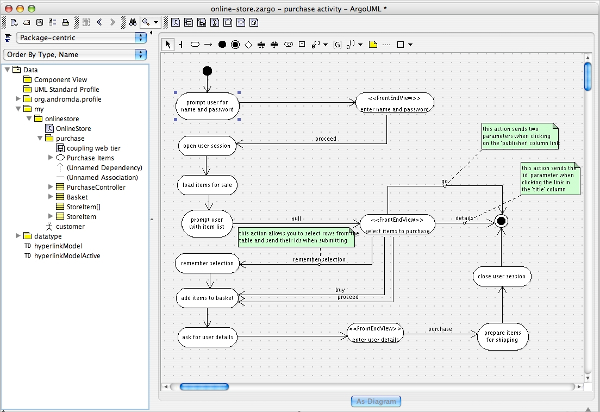

The tool shown in Figure 1 incorporates a syntax checking facility (within the Tools menu). This facility can be disabled if required, for example if the tool is used in an exam. The syntax checker identifies errors by shading (objects and activations) and change of colour (messages), although this mechanism may change after usability testing. A textual description of an error can be obtained by right-clicking on the erroneous element and requesting feedback, as shown in Figure 2. Having identified errors, the tool can be asked to repair them (see Figure 3). Not all errors are repaired – only those that can be handled with reasonable certainty. Simple errors, by definition, are those that can be repaired without changing the essential meaning of the diagram. Other errors will be repaired on the basis of the most likely error – there are some patterns which occur frequently and have highly likely causes (see Figure 4).
